
An App That Can Understand the Context of Any Web Page.

In this article, we’ll show you how to create a handy web app that can summarize the content of any web page. We’ll use Next.js for a smooth and fast web experience, LangChain for processing language, OpenAI for generating summaries, and Supabase for managing and storing vector data. Together, these make a powerful tool.

Why We’re Building It

We all face information overload with so much content online. An app that gives quick summaries helps people save time and stay informed. Whether you’re a busy professional, a student, or just someone who wants to keep up with news and articles, this app will be a helpful tool for you.

How it’s Going to Be

Our app will let users enter any website URL and quickly get a brief summary of the page. This means you can understand the main points of long articles, blog posts, or research papers without reading them in full.

Potential and Impact

This summarization app can be useful in many ways. It can help researchers get through academic papers, keep news lovers updated, and more. Developers can also build on this app to create even more useful features.

Next.js

Next.js is a powerful and flexible React framework developed by Vercel. It enables developers to build server-side rendered (SSR) and static web applications with ease. It combines the best features of React with additional capabilities for creating optimized and scalable web applications.

OpenAI

The OpenAI module for Node.js provides a way to interact with OpenAI’s API, allowing developers to leverage powerful language models like GPT-3 and GPT-4. It lets you integrate advanced AI functionality into your Node.js applications.

LangChain.js

LangChain is a powerful framework designed for developing applications with language models. It was originally developed for Python and has since been adapted for other languages, including JavaScript/TypeScript for Node.js. Here’s an overview of LangChain in the context of Node.js:

What is LangChain?

LangChain is a library that simplifies the creation of applications using large language models (LLMs). It provides tools to manage LLMs and integrate them into your applications. It also handles the chaining of calls to these models and makes complex workflows easy to build.
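To make "chaining" concrete, here is a minimal sketch in plain TypeScript of the underlying idea: a list of steps where each step’s output becomes the next step’s input. The `runSequence` helper and its `Step` type are hypothetical illustrations, not LangChain’s API. LangChain’s own `RunnableSequence`, used later in this article, follows the same pattern with a much richer interface.

```typescript
// Hypothetical illustration of chaining: NOT LangChain's API.
// Each step receives the previous step's output; steps may be sync or async.
type Step = (input: any) => unknown | Promise<unknown>;

async function runSequence(steps: Step[], input: unknown): Promise<unknown> {
  let value = input;
  for (const step of steps) {
    // Await so async steps (e.g. a model call) resolve before the next step runs
    value = await step(value);
  }
  return value;
}

// Usage: three toy steps standing in for "clean", "build prompt", and "call model"
runSequence(
  [
    (text: string) => text.trim(),
    (text: string) => `Summarize: ${text}`,
    async (prompt: string) => prompt.toUpperCase(),
  ],
  "  a long article  "
).then((output) => console.log(output)); // SUMMARIZE: A LONG ARTICLE
```

In a real chain, the last step would be an LLM call that returns a promise. Because each step is awaited, that call fits the same loop.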

How do Large Language Models (LLM) Work?

Large Language Models (LLMs) like OpenAI’s GPT-3.5 are trained on vast amounts of text data to understand and generate human-like text. They can generate responses, translate languages, and perform many other natural language processing tasks.

Supabase

Supabase is an open-source backend-as-a-service (BaaS) platform designed to help developers quickly build and deploy scalable applications. It offers a suite of tools and services that simplify database management, authentication, storage, and real-time features, all built on top of PostgreSQL.

Prerequisites

Before we start, make sure you have the following:

- Node.js and npm installed

- A Supabase account

- An OpenAI account

Step 1: Setting Up Supabase

First, we need to set up a Supabase project and create the tables that will store our data.

Create a Supabase Project

- Go to Supabase, and sign up for an account.

- Create a new project, and make a note of your Supabase URL and API key. You’ll need these later.

SQL Script for Supabase

Create a new SQL query in your Supabase dashboard, and run the following scripts to create the required tables and functions:

First, create the `vector` extension (pgvector) for our vector store if it doesn’t already exist:

```sql
create extension if not exists vector;
```

Next, create a table called "documents." This table will store the content of the web page along with its vector embeddings:

```sql
create table if not exists documents (
  id bigint primary key generated always as identity,
  content text,
  metadata jsonb,
  embedding vector(1536)
);
```

Now, we need a function to query our embedded data:

```sql
create or replace function match_documents (
  query_embedding vector(1536),
  match_count int default null,
  filter jsonb default '{}'
) returns table (
  id bigint,
  content text,
  metadata jsonb,
  similarity float
)
-- "#variable_conflict use_column" makes id, content, and metadata refer to the table's
-- columns instead of clashing with the output columns of the same names
language plpgsql
as $$
#variable_conflict use_column
begin
  return query
  select
    id,
    content,
    metadata,
    1 - (documents.embedding <=> query_embedding) as similarity
  from documents
  where metadata @> filter
  order by documents.embedding <=> query_embedding
  limit match_count;
end;
$$;
```
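`match_documents` works in three steps:

- It keeps only rows whose `metadata` contains the `filter` object (`@>`).
- It ranks them by pgvector’s cosine distance operator `<=>`.
- It reports `1 - distance` as the similarity.

The sketch below mirrors that logic in memory so the math is easy to follow. The `Row` type and the `matchDocuments` helper are hypothetical. The containment check only handles flat, one-level metadata, unlike Postgres’s `@>`. As in SQL, leaving `matchCount` undefined means "no limit".

```typescript
// In-memory sketch of match_documents (hypothetical helper, not part of Supabase or LangChain).
type Row = {
  id: number;
  content: string;
  metadata: Record<string, unknown>;
  embedding: number[];
};

// Cosine distance, like pgvector's <=> operator: 1 - cos(angle between a and b)
function cosineDistance(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return 1 - dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Flat version of `metadata @> filter`: every filter key must match exactly
function contains(metadata: Record<string, unknown>, filter: Record<string, unknown>): boolean {
  return Object.entries(filter).every(([key, value]) => metadata[key] === value);
}

function matchDocuments(
  rows: Row[],
  queryEmbedding: number[],
  matchCount?: number,
  filter: Record<string, unknown> = {}
) {
  return rows
    .filter((row) => contains(row.metadata, filter)) // where metadata @> filter
    .map((row) => ({ row, distance: cosineDistance(row.embedding, queryEmbedding) }))
    .sort((x, y) => x.distance - y.distance) // order by embedding <=> query_embedding
    .slice(0, matchCount) // limit match_count (undefined keeps every row)
    .map(({ row, distance }) => ({
      id: row.id,
      content: row.content,
      metadata: row.metadata,
      similarity: 1 - distance,
    }));
}

const rows: Row[] = [
  { id: 1, content: "about cats", metadata: { file_id: 1 }, embedding: [1, 0] },
  { id: 2, content: "about dogs", metadata: { file_id: 1 }, embedding: [0, 1] },
  { id: 3, content: "another page", metadata: { file_id: 2 }, embedding: [1, 0] },
];

// Best match within file 1 for a query pointing the same way as row 1
console.log(matchDocuments(rows, [1, 0], 1, { file_id: 1 })); // only row 1, similarity 1
```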

Next, we need to set up a table for storing the web page’s details:

```sql
create table if not exists files (
  id bigint primary key generated always as identity,
  url text not null,
  created_at timestamp with time zone default timezone('utc'::text, now()) not null
);
```

Step 2: Setting Up OpenAI

Create OpenAI Project

- Navigate to API: After logging in, go to the API section and create a new API key. This section is usually accessible from the dashboard.

Step 3: Setting Up Next.js

Create Next.js app

```bash
npx create-next-app summarize-page
cd ./summarize-page
```

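When `create-next-app` prompts you, choose TypeScript and the `src/` directory, and decline the App Router. The following steps use the Pages Router under `src/pages` and the default `@/` import alias. Then install the required dependencies: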

```bash
npm install @langchain/community @langchain/core @langchain/openai @supabase/supabase-js langchain openai axios
```

Then, install Material UI for building our interface. Feel free to use another library:

```bash
npm install @mui/material @emotion/react @emotion/styled
```

Step 4: OpenAI and Supabase Clients

Next, we need to set up the OpenAI and Supabase clients. Create a libs directory in your project, and add the following files.

src/libs/openAI.ts

This file configures the OpenAI client.

```typescript
import { ChatOpenAI, OpenAIEmbeddings } from "@langchain/openai";

const openAIApiKey = process.env.OPENAI_API_KEY;

if (!openAIApiKey) throw new Error("OpenAI API key not found.");

// Chat model that generates the summaries (library default model settings)
export const llm = new ChatOpenAI({ openAIApiKey });

// Embedding model; its output size must match the vector(1536) column in Supabase
export const embeddings = new OpenAIEmbeddings({ openAIApiKey });
```

llm: The language model instance, which generates our summaries.

embeddings: Creates embeddings for our documents, which lets us find similar content.

src/libs/supabaseClient.ts

This file configures the Supabase client.

```typescript
import { createClient } from "@supabase/supabase-js";

const supabaseUrl = process.env.SUPABASE_URL || "";
const supabaseAnonKey = process.env.SUPABASE_ANON_KEY || "";

if (!supabaseUrl) throw new Error("Supabase URL not found.");
if (!supabaseAnonKey) throw new Error("Supabase Anon key not found.");

export const supabaseClient = createClient(supabaseUrl, supabaseAnonKey);
```

supabaseClient: The Supabase client instance used to interact with our Supabase database.

Step 5: Creating Services for Content and Files

Create a services directory, and add the following files to handle fetching content and managing files.

src/services/content.ts

This service fetches the web page content and cleans it by removing HTML tags, scripts, and styles.

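```typescript
import axios from "axios";

export async function getContent(url: string): Promise<string> {
  const { data: html } = await axios.get<string>(url, { responseType: "text" });
  // Regex-based cleanup (a simple sketch; an HTML parser would be more robust)
  return html
    .replace(/<style[^>]*>[\s\S]*?<\/style>/gi, "") // drop <style> blocks
    .replace(/<script[^>]*>[\s\S]*?<\/script>/gi, "") // drop <script> blocks
    .replace(/<[^>]+>/g, " ") // strip the remaining tags
    .replace(/\s+/g, " ") // collapse whitespace
    .trim();
}
```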

This function fetches the HTML content of a given URL and cleans it up by removing styles, scripts, and HTML tags.

src/services/file.ts

This service saves the web page content into Supabase and retrieves summaries.

```typescript
import { embeddings, llm } from "@/libs/openAI";
import { supabaseClient } from "@/libs/supabaseClient";
import { SupabaseVectorStore } from "@langchain/community/vectorstores/supabase";
import { StringOutputParser } from "@langchain/core/output_parsers";
import {
  ChatPromptTemplate,
  HumanMessagePromptTemplate,
  SystemMessagePromptTemplate,
} from "@langchain/core/prompts";
import {
  RunnablePassthrough,
  RunnableSequence,
} from "@langchain/core/runnables";
import { RecursiveCharacterTextSplitter } from "langchain/text_splitter";
import { formatDocumentsAsString } from "langchain/util/document";

export interface IFile {
  id?: number | undefined;
  url: string;
  created_at?: Date | undefined;
}

export async function saveFile(url: string, content: string): Promise<IFile> {
  // Reuse the existing row if this URL has already been saved and embedded
  const doc = await supabaseClient
    .from("files")
    .select()
    .eq("url", url)
    .single<IFile>();

  if (!doc.error && doc.data?.id) return doc.data;

  const { data, error } = await supabaseClient
    .from("files")
    .insert({ url })
    .select()
    .single<IFile>();

  if (error) throw error;

  // Split on paragraphs first, then lines, then words, then characters
  const splitter = new RecursiveCharacterTextSplitter({
    separators: ["\n\n", "\n", " ", ""],
  });

  const output = await splitter.createDocuments([content]);

  // Tag every chunk with its file id so it can be filtered later
  const docs = output.map((d) => ({
    ...d,
    metadata: { ...d.metadata, file_id: data.id },
  }));

  // Embed the chunks and store them in the "documents" table
  await SupabaseVectorStore.fromDocuments(docs, embeddings, {
    client: supabaseClient,
    tableName: "documents",
    queryName: "match_documents",
  });

  return data;
}

export async function getSummarization(fileId: number): Promise<string> {
  const vectorStore = await SupabaseVectorStore.fromExistingIndex(embeddings, {
    client: supabaseClient,
    tableName: "documents",
    queryName: "match_documents",
  });

  // Search only this file's chunks and return the 2 closest matches
  const retriever = vectorStore.asRetriever({
    filter: (rpc) => rpc.filter("metadata->>file_id", "eq", fileId),
    k: 2,
  });

  const SYSTEM_TEMPLATE = `Use the following pieces of context, explain what it is about and summarize it.
If you can't explain it, just say that you don't know, don't try to make up some explanation.
----------------
{context}`;

  const messages = [
    SystemMessagePromptTemplate.fromTemplate(SYSTEM_TEMPLATE),
    HumanMessagePromptTemplate.fromTemplate("{format_answer}"),
  ];

  const prompt = ChatPromptTemplate.fromMessages(messages);

  // The input string is used both as the retrieval query and as {format_answer}
  const chain = RunnableSequence.from([
    {
      context: retriever.pipe(formatDocumentsAsString),
      format_answer: new RunnablePassthrough(),
    },
    prompt,
    llm,
    new StringOutputParser(),
  ]);

  const format_summarization = `
Give it a title, subject, description, and the conclusion of the context in this format, replacing the brackets with the actual content:

[Write the title here]

By: [Name of the author, owner, publisher, writer, or reporter if possible, otherwise write "Not Specified"]

[Write the subject here, it could be a long text, at least 300 characters]

----------------

[Write the description here, it could be a long text, at least 1000 characters]

Conclusion:

[Write the conclusion here, it could be a long text, at least 500 characters]
`;

  const summarization = await chain.invoke(format_summarization);

  return summarization;
}
```

saveFile: Saves the file and its content to Supabase, splits the content into manageable chunks, and stores them in the vector store.

getSummarization: Retrieves relevant documents from the vector store and generates a summary using OpenAI.
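`RecursiveCharacterTextSplitter` tries its separators in order (`"\n\n"`, then `"\n"`, then `" "`, then `""`). It only falls back to a finer separator when a piece is still longer than the chunk size. LangChain’s defaults are a chunk size of 1000 characters with 200 characters of overlap.

The sketch below shows the recursive idea in plain TypeScript. `recursiveSplit` is a hypothetical, simplified helper, not LangChain’s implementation. It has no chunk overlap and drops the separator at chunk boundaries, and the chunk size of 20 in the example is arbitrary.

```typescript
// Simplified sketch of recursive character splitting (hypothetical helper, not LangChain's code).
// No chunk overlap; separators at chunk boundaries are dropped.
function recursiveSplit(text: string, separators: string[], chunkSize: number): string[] {
  if (text.length <= chunkSize) return [text];

  // Out of separators: hard-cut the text into fixed-size pieces
  if (separators.length === 0) {
    const pieces: string[] = [];
    for (let i = 0; i < text.length; i += chunkSize) pieces.push(text.slice(i, i + chunkSize));
    return pieces;
  }

  const [separator, ...finer] = separators;
  // The empty-string separator splits the text into single characters
  const parts = separator === "" ? Array.from(text) : text.split(separator);
  const chunks: string[] = [];
  let current = "";

  for (const part of parts) {
    if (part.length > chunkSize) {
      // Too big on its own: flush the current chunk, then split the part with finer separators
      if (current) chunks.push(current);
      current = "";
      chunks.push(...recursiveSplit(part, finer, chunkSize));
      continue;
    }
    // Greedily merge small parts back together while they still fit
    const candidate = current ? current + separator + part : part;
    if (candidate.length <= chunkSize) {
      current = candidate;
    } else {
      chunks.push(current);
      current = part;
    }
  }
  if (current) chunks.push(current);
  return chunks;
}

const text = "para one is here.\n\npara two is a bit longer than one.\n\nthree";
console.log(recursiveSplit(text, ["\n\n", "\n", " ", ""], 20));
// 4 chunks: "para one is here.", "para two is a bit", "longer than one.", "three"
```

Only the second paragraph exceeds 20 characters. The splitter therefore breaks that paragraph on spaces, while the other paragraphs stay whole.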
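Conceptually, the chain in `getSummarization` joins the retrieved chunks into one string for `{context}`. It passes the input text through unchanged as `{format_answer}`.

Because that input text (the formatting instructions) is also the retrieval query, `k: 2` fetches only the two chunks most similar to those instructions. Long pages may therefore be summarized from a small part of their content. Raising `k` trades cost for coverage.

The sketch below imitates the placeholder substitution in plain TypeScript. `fillTemplate` and `joinChunks` are hypothetical helpers, not LangChain’s API. The `"\n\n"` joiner is assumed to match `formatDocumentsAsString`, and unlike LangChain templates, there is no brace escaping.

```typescript
// Hypothetical helpers imitating the prompt template and document formatter; not LangChain's API.
type Chunk = { pageContent: string };

// Join retrieved chunks into one block of context text
function joinChunks(chunks: Chunk[]): string {
  return chunks.map((chunk) => chunk.pageContent).join("\n\n");
}

// Replace {name} placeholders with values; unknown placeholders are left untouched
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (placeholder: string, name: string) =>
    Object.prototype.hasOwnProperty.call(values, name) ? values[name] : placeholder
  );
}

const systemTemplate = "Summarize the following context.\n----------------\n{context}";
const filledPrompt = fillTemplate(systemTemplate, {
  context: joinChunks([{ pageContent: "First chunk." }, { pageContent: "Second chunk." }]),
});
console.log(filledPrompt);
// Summarize the following context.
// ----------------
// First chunk.
//
// Second chunk.
```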

Step 6: Creating an API Handler

Now, let’s create an API handler that processes the content and generates a summary.

src/pages/api/content.ts

```typescript
import { getContent } from "@/services/content";
import { getSummarization, saveFile } from "@/services/file";
import { NextApiRequest, NextApiResponse } from "next";

export default async function handler(
  req: NextApiRequest,
  res: NextApiResponse
) {
  // Only POST requests are handled
  if (req.method !== "POST")
    return res.status(404).json({ message: "Not found" });

  const { body } = req;

  try {
    const content = await getContent(body.url);
    const file = await saveFile(body.url, content);
    const result = await getSummarization(file.id as number);
    res.status(200).json({ result });
  } catch (err) {
    // Error instances serialize to {} in JSON, so send the message instead
    res.status(500).json({ error: (err as { message?: string })?.message ?? String(err) });
  }
}
```

This API handler receives a URL, fetches the content, saves it to Supabase, and generates a summary. It calls both the saveFile and getSummarization functions from our services.
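The handler passes `body.url` straight to `getContent`. A small validation step before fetching could reject missing or non-HTTP(S) URLs with a `400` response instead of a `500`.

Below is a minimal sketch of such a check. `parseTargetUrl` is a hypothetical helper, not part of the original project. It relies only on the standard WHATWG `URL` class, which is available globally in Node.js. It does not, by itself, prevent the server from fetching internal network addresses.

```typescript
// Hypothetical helper: validate the `url` field of a request body before fetching it.
// Returns a parsed URL for http(s) addresses, or null for anything else.
function parseTargetUrl(body: unknown): URL | null {
  if (typeof body !== "object" || body === null) return null;
  const raw = (body as { url?: unknown }).url;
  if (typeof raw !== "string") return null;
  try {
    const parsed = new URL(raw); // throws a TypeError on malformed input
    return parsed.protocol === "http:" || parsed.protocol === "https:" ? parsed : null;
  } catch {
    return null;
  }
}

console.log(parseTargetUrl({ url: "https://example.com/post" })?.hostname); // example.com
console.log(parseTargetUrl({ url: "javascript:alert(1)" })); // null
```

In the handler, this check could run before `getContent`, for example: `const target = parseTargetUrl(req.body); if (!target) return res.status(400).json({ message: "Invalid URL" });`.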

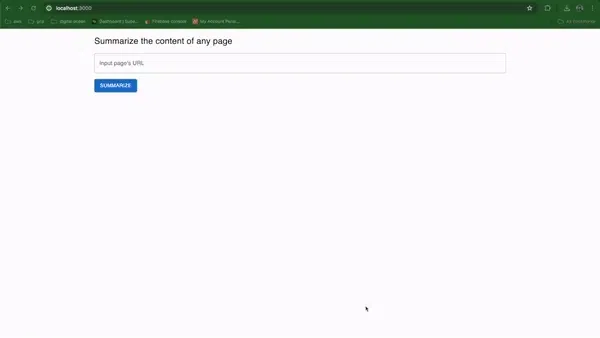

Step 7: Building the Frontend

Finally, let’s create the frontend in src/pages/index.tsx so users can input URLs and see the summaries.

src/pages/index.tsx

```tsx
import axios from "axios";
import { useState } from "react";
import {
  Alert,
  Box,
  Button,
  Container,
  LinearProgress,
  Stack,
  TextField,
  Typography,
} from "@mui/material";

export default function Home() {
  const [loading, setLoading] = useState(false);
  const [url, setUrl] = useState("");
  const [result, setResult] = useState("");
  const [error, setError] = useState<any>(null);

  // Send the URL to our API route and show the returned summary
  const onSubmit = async () => {
    try {
      setError(null);
      setLoading(true);
      const res = await axios.post("/api/content", { url });
      setResult(res.data.result);
    } catch (err) {
      console.error("Failed to fetch content", err);
      setError(err as any);
    } finally {
      setLoading(false);
    }
  };

  return (
    <Box sx={{ height: "100vh", overflowY: "auto" }}>
      <Container
        sx={{
          backgroundColor: (theme) => theme.palette.background.default,
          position: "sticky",
          top: 0,
          zIndex: 2,
          py: 2,
        }}
      >
        <Typography sx={{ mb: 2, fontSize: "24px" }}>
          Summarize the content of any page
        </Typography>
        <TextField
          fullWidth
          label="Input page's URL"
          value={url}
          onChange={(e) => {
            // Clear the previous summary when the URL changes
            if (result) setResult("");
            setUrl(e.target.value);
          }}
          sx={{ mb: 2 }}
        />
        <Button disabled={loading} variant="contained" onClick={onSubmit}>
          Summarize
        </Button>
      </Container>
      <Container maxWidth="lg" sx={{ py: 2 }}>
        {loading ? (
          <LinearProgress />
        ) : (
          <Stack sx={{ gap: 2 }}>
            {result && (
              <Alert>
                <Typography
                  sx={{
                    whiteSpace: "pre-line",
                    wordBreak: "break-word",
                  }}
                >
                  {result}
                </Typography>
              </Alert>
            )}
            {error && <Alert severity="error">{error.message || error}</Alert>}
          </Stack>
        )}
      </Container>
    </Box>
  );
}
```

This React component lets users enter a URL, submit it, and see the generated summary. It handles loading states and error messages to provide a better user experience.

Step 8: Running the Application

Create a .env file in the root of your project to store your environment variables:

```
SUPABASE_URL=your-supabase-url
SUPABASE_ANON_KEY=your-supabase-anon-key
OPENAI_API_KEY=your-openai-api-key
```

Finally, run your Next.js application:

```bash
npm run dev
```

You should now have a running application where you can enter a web page’s URL and receive a summary of the page.

Conclusion

Congratulations! You’ve built a fully functional web page summarization application using Next.js, OpenAI, LangChain, and Supabase. Users can enter a URL, and the app fetches the content, stores it in Supabase, and generates a summary using OpenAI. This setup provides a solid foundation for further enhancements and customization based on your needs.

Feel free to extend this project by adding more features, improving the UI, or integrating other APIs.

Check the Source Code in This Repo:

https://github.com/firstpersoncode/summarize-page

Happy coding!


Source Link: https://hackernoon.com/how-to-build-a-web-page-summarization-app-with-nextjs-openai-langchain-and-supabase
